Introduction

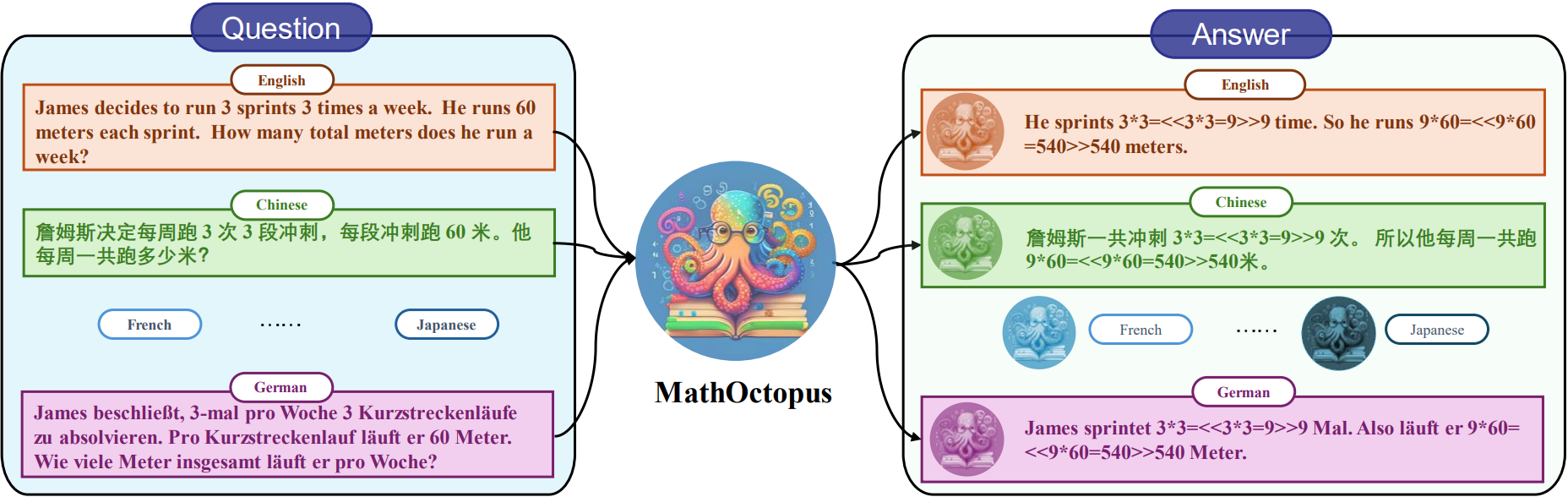

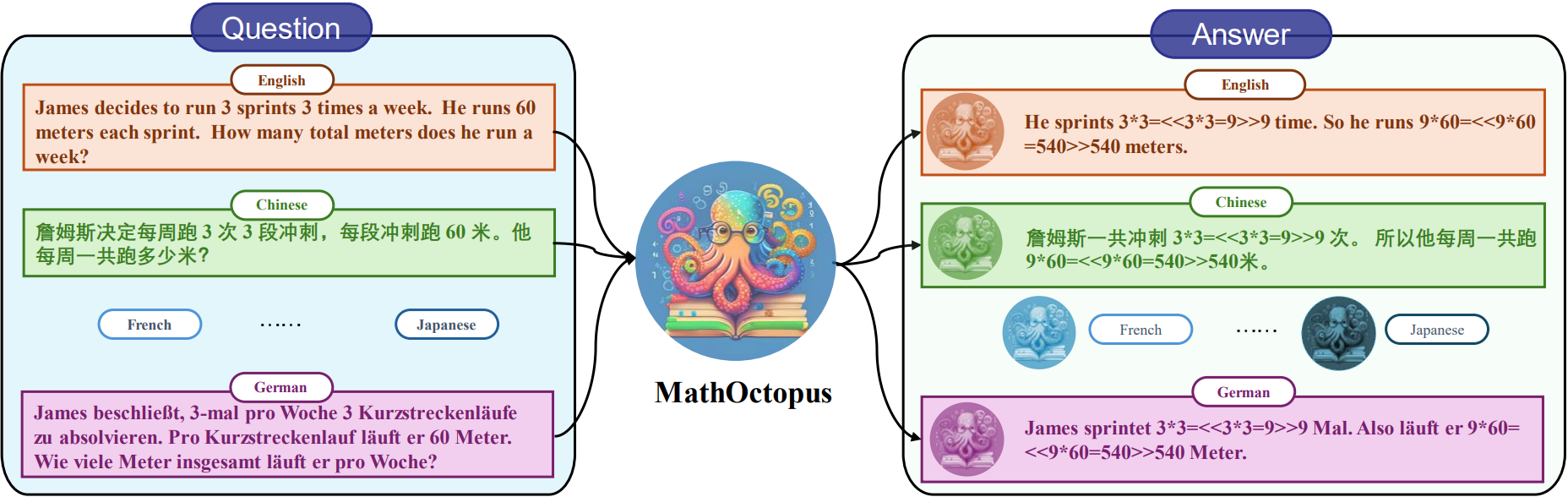

This work pioneers the exploration and building of powerful Multilingual Math Reasoning (xMR) LLMs. To this end, we make the following contributions:

- MGSM8KInstruct, the first multilingual math reasoning instruction dataset, covering ten distinct languages and addressing the scarcity of training data for xMR tasks.

- MSVAMP, an out-of-domain xMR test dataset that allows a more comprehensive evaluation of a model’s multilingual mathematical capabilities.

- MathOctopus, our multilingual math reasoning LLMs, trained with different strategies, which notably outperform conventional open-source LLMs and exhibit superiority over ChatGPT in few-shot scenarios.

MGSM8KInstruct

| Training Dataset | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MGSM8KInstruct | 7473 | 7472 | 7466 | 6539 | 7466 | 7470 | 7469 | 7471 | 7361 | 7473 | 73.6K |

MSVAMP

| Test Dataset | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MSVAMP | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 | 10K |

Overall Results on MGSM

| 7B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 52.0 | 23.6 | 31.6 | 18.8 | 38.0 | 39.2 | 36.4 | 27.2 | 33.6 | 21.6 | 32.2 |
| xRFT-MathOctopusC | 51.2 | 24.0 | 33.2 | 18.8 | 36.0 | 41.2 | 37.6 | 29.6 | 36.4 | 25.2 | 33.3 |
| MathOctopusP-LoRA | 30.4 | 15.2 | 23.6 | 10.4 | 22.8 | 24.8 | 26.4 | 18.0 | 22.0 | 14.8 | 20.8 |
| MathOctopusP | 52.4 | 39.2 | 38.4 | 28.8 | 44.8 | 42.4 | 43.6 | 36.0 | 39.6 | 34.4 | 40.0 |
| xRFT-MathOctopusP | 54.8 | 38.4 | 45.2 | 33.2 | 43.6 | 45.2 | 38.0 | 35.6 | 48.4 | 36.4 | 41.9 |

| 13B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 56.4 | 27.2 | 39.2 | 24.0 | 47.6 | 49.6 | 47.6 | 40.4 | 42.0 | 24.8 | 39.9 |
| xRFT-MathOctopusC | 53.6 | 28.0 | 45.2 | 21.2 | 48.0 | 46.4 | 46.0 | 35.2 | 45.6 | 28.8 | 39.8 |
| MathOctopusP | 53.2 | 42.8 | 48.8 | 35.2 | 44.4 | 48.0 | 48.4 | 43.2 | 47.6 | 46.8 | 45.8 |
| xRFT-MathOctopusP | 51.6 | 46.0 | 51.2 | 42.0 | 49.2 | 53.2 | 49.6 | 39.6 | 47.6 | 46.0 | 47.6 |

| 30-34B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 55.6 | 24.4 | 36.0 | 19.2 | 40.4 | 51.2 | 44.4 | 27.2 | 37.2 | 21.6 | 35.7 |
| xRFT-MathOctopusC | 53.6 | 27.6 | 34.4 | 19.2 | 47.2 | 47.6 | 44.8 | 30.8 | 38.8 | 22.8 | 36.7 |
| MathOctopusP | 56.4 | 46.8 | 52.0 | 35.2 | 47.2 | 53.2 | 48.0 | 39.2 | 45.6 | 41.2 | 46.5 |
| xRFT-MathOctopusP | 51.6 | 47.2 | 52.4 | 37.6 | 51.2 | 52.8 | 44.4 | 41.6 | 50.0 | 47.6 | 47.6 |
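
The Overall column in these tables appears to be the unweighted mean of the ten per-language accuracies. The source does not state this explicitly, so treat it as an assumption; the short Python sketch below simply checks that the numbers are consistent with it for the 7B rows. The helper name `overall` is hypothetical and not part of any released code.

```python
from statistics import mean

# Per-language MGSM accuracy (%) for two 7B rows, copied from the table above.
MATHOCTOPUS_C_7B = {
    "En": 52.0, "Sw": 23.6, "Zh": 31.6, "Bn": 18.8, "De": 38.0,
    "Es": 39.2, "Fr": 36.4, "Ja": 27.2, "Ru": 33.6, "Th": 21.6,
}
XRFT_MATHOCTOPUS_C_7B = {
    "En": 51.2, "Sw": 24.0, "Zh": 33.2, "Bn": 18.8, "De": 36.0,
    "Es": 41.2, "Fr": 37.6, "Ja": 29.6, "Ru": 36.4, "Th": 25.2,
}


def overall(per_language):
    """Unweighted mean over languages, rounded to one decimal place.

    Assumption: this is how the Overall column is derived; the README
    does not say so explicitly.
    """
    return round(mean(per_language.values()), 1)


print(overall(MATHOCTOPUS_C_7B))       # matches the reported 32.2
print(overall(XRFT_MATHOCTOPUS_C_7B))  # matches the reported 33.3
```

Because every language contributes equally to this mean, a gain in a low-resource language such as Bn or Sw moves the Overall score as much as the same gain in En.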

Overall Results on MSVAMP

| 7B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 49.2 | 36.6 | 43.6 | 30.2 | 48.6 | 46.8 | 46.4 | 42.5 | 46.7 | 34.0 | 42.5 |
| xRFT-MathOctopusC | 49.9 | 37.7 | 43.3 | 32.9 | 46.5 | 47.6 | 47.3 | 42.7 | 46.6 | 36.2 | 43.1 |
| MathOctopusP-LoRA | 30.4 | 15.2 | 23.6 | 10.4 | 22.8 | 24.8 | 26.4 | 18.0 | 22.0 | 14.8 | 20.8 |
| MathOctopusP | 46.5 | 40.1 | 42.5 | 29.1 | 43.5 | 45.4 | 46.0 | 42.5 | 45.4 | 35.7 | 41.7 |
| xRFT-MathOctopusP | 46.8 | 42.3 | 43.2 | 32.8 | 43.1 | 44.5 | 45.3 | 43.2 | 42.1 | 40.5 | 42.4 |

| 13B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 56.6 | 40.4 | 49.0 | 30.3 | 50.9 | 54.2 | 54.7 | 46.3 | 52.4 | 35.7 | 47.1 |
| xRFT-MathOctopusC | 52.9 | 41.9 | 49.2 | 34.1 | 50.5 | 52.8 | 51.5 | 45.8 | 50.2 | 35.7 | 46.5 |
| MathOctopusP | 50.7 | 43.4 | 42.6 | 31.8 | 48.4 | 49.4 | 50.6 | 41.1 | 46.9 | 39.3 | 44.4 |
| xRFT-MathOctopusP | 44.6 | 43.4 | 46.4 | 34.2 | 47.7 | 48.2 | 49.9 | 43.1 | 48.2 | 39.5 | 44.5 |

| 30-34B Model | En | Sw | Zh | Bn | De | Es | Fr | Ja | Ru | Th | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MathOctopusC | 51.5 | 42.1 | 46.2 | 23.2 | 50.5 | 52.1 | 52.9 | 42.2 | 50.5 | 33.4 | 44.5 |
| xRFT-MathOctopusC | 48.1 | 42.8 | 43.6 | 23.3 | 48.7 | 50.0 | 48.9 | 43.4 | 44.6 | 35.5 | 42.9 |
| MathOctopusP | 56.4 | 46.8 | 52.0 | 35.2 | 47.2 | 53.2 | 48.0 | 39.2 | 45.6 | 41.2 | 46.5 |
| xRFT-MathOctopusP | 48.0 | 42.3 | 46.1 | 36.2 | 47.5 | 48.5 | 48.3 | 45.8 | 47.2 | 41.2 | 45.1 |

MathOctopus in English

| Models | GSM8K | SVAMP |
|---|---|---|
| LLaMA 2-7B | 42.4 | 38.3 |
| MathOctopusP-7B | 49.3 | 46.8 |
| MathOctopusC-7B | 50.8 | 49.3 |
| LLaMA 2-13B | 51.0 | 50.9 |
| MathOctopusP-13B | 55.5 | 52.1 |
| MathOctopusC-13B | 56.6 | 56.6 |
| LLaMA 1-33B | 50.0 | 49.0 |
| MathOctopusP-33B | 56.0 | 52.5 |
| MathOctopusC-33B | 53.7 | 51.5 |
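
To make the English comparison easier to read, the sketch below computes each MathOctopus model's gain, in accuracy points, over the LLaMA row of the same size. All numbers are copied from the table above; the names `BASELINES`, `MATHOCTOPUS`, and `gains` are hypothetical helpers introduced for illustration only.

```python
# (GSM8K, SVAMP) accuracy (%) copied from the table above.
# Baselines: LLaMA 2 for 7B and 13B, LLaMA 1 for 33B.
BASELINES = {"7B": (42.4, 38.3), "13B": (51.0, 50.9), "33B": (50.0, 49.0)}

MATHOCTOPUS = {
    "MathOctopusP-7B": (49.3, 46.8),
    "MathOctopusC-7B": (50.8, 49.3),
    "MathOctopusP-13B": (55.5, 52.1),
    "MathOctopusC-13B": (56.6, 56.6),
    "MathOctopusP-33B": (56.0, 52.5),
    "MathOctopusC-33B": (53.7, 51.5),
}


def gains(model):
    """Return (GSM8K gain, SVAMP gain) over the same-size LLaMA baseline."""
    size = model.rsplit("-", 1)[1]  # e.g. "MathOctopusP-7B" -> "7B"
    baseline = BASELINES[size]
    return tuple(round(m - b, 1) for m, b in zip(MATHOCTOPUS[model], baseline))


for name in MATHOCTOPUS:
    gsm8k_gain, svamp_gain = gains(name)
    print(f"{name}: GSM8K +{gsm8k_gain}, SVAMP +{svamp_gain}")
```

Every difference in this table is positive, so each MathOctopus variant improves on its same-size LLaMA row on both English benchmarks.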

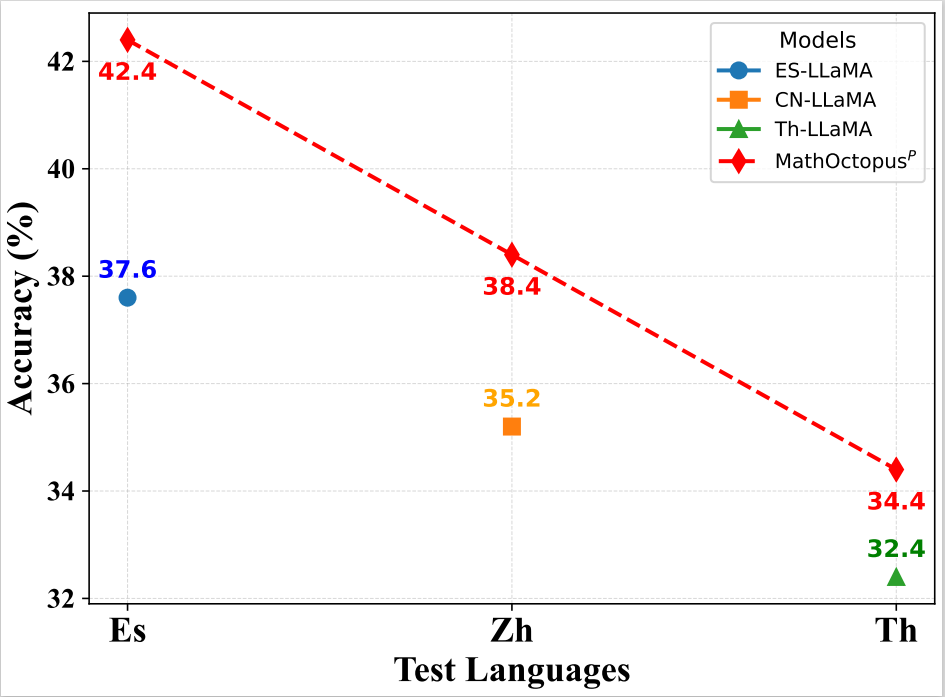

Multilingual SFT can generally benefit Monolingual SFT

The figure above shows the test results of several models, each in its own training language. Our model still surpasses the monolingual SFT models in their native training languages. This suggests that, at least for math reasoning, multilingual SFT is a stronger training strategy than monolingual SFT, and that it also improves a model’s performance in its native language.